Containers are a great way to build, ship and run applications anywhere, on-premises or in the cloud. When they’re used correctly, they improve the portability and operability of our workloads.

For production deployments involving multiple machines (for scalability or reliability reasons), it is fairly common to use an orchestrator like Kubernetes. The orchestrator can also manage the underlying infrastructure components, including network and storage.

Stateful components (like databases or message queues) are comparatively harder to deploy than their stateless counterparts, precisely because of these storage components.

In the specific case of Kubernetes, storage is materialized by volumes. There are many different sorts of volumes on Kubernetes: ephemeral volumes (like emptyDir), local persistent volumes (like hostPath), remote volumes (using NFS, iSCSI, or cloud-based disks or files)… There is also a distinction between static and dynamic provisioning of volumes.

Historically, the code to manage all these volumes was “in-tree”, meaning that it lived in the main Kubernetes codebase, and Kubernetes supported a limited selection of volume types. Common volumes like NFS or iSCSI were supported “in-tree”, as well as the block volumes available on US cloud providers like AWS or Azure. To facilitate the maintenance of the code supporting these volumes, and to make it easier to add support for new types of volumes (like the ones you can find on DigitalOcean or Scaleway, for instance), Kubernetes moved to an “out-of-tree” system leveraging the Container Storage Interface, or CSI.

(Before CSI, there was also a system called “FlexVolumes”, but we’re not going to cover it here.)

At Enix, we help our customers to run containerized workloads in many different environments. Public cloud (e.g. AWS or OVH), private cloud (e.g. on our hosted Proxmox or OpenStack clusters), dedicated servers in your datacenters or ours… We regularly work with various network and storage equipment. In particular, we regularly work with Dot Hill storage appliances, and these appliances weren’t supported by Kubernetes. So we wrote a CSI plugin to support them.

In this article, we’ll explain what CSI is, what problem it solves, why and how we wrote a CSI plugin, and some of the challenges that we faced. Even if you don’t work with these storage appliances, we hope that this article will give you a good overview of the CSI ecosystem on Kubernetes.

What is Kubernetes CSI?

CSI (Container Storage Interface) is a standard to unify the dynamic provisioning of local or remote persistent volumes.

It was created to solve storage challenges for stateful containerized workloads. It defines an interface between two actors:

- the Container Orchestrator (CO), for instance Kubernetes, Mesos, Nomad;

- the Storage Provider (SP), which manages the actual volumes.

The goal of that interface is to provide storage where it’s needed (on the right nodes) in the required quantity and size. The interface can thus be implemented from either side, as a CO or as an SP.

Here are some examples of what the CO can ask the SP to do:

- create a new empty volume with a specific size

- resize a volume

- snapshot a volume

- create a new volume from a snapshot

- delete a volume

- attach and mount a volume on a specific host
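To make the list above more concrete, here is a minimal sketch of a toy storage provider that keeps everything in memory. The class and method names (`InMemoryStorageProvider`, `create_volume`, `expand_volume`, and so on) are hypothetical and chosen for readability; they are not the RPC names from the CSI specification, and a real SP would talk to an appliance or a cloud API instead of dictionaries.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Volume:
    volume_id: str
    size_bytes: int
    published_on: Optional[str] = None  # node the volume is attached to, if any


class InMemoryStorageProvider:
    """Toy SP (hypothetical): volumes and snapshots live in dictionaries."""

    def __init__(self) -> None:
        self.volumes: Dict[str, Volume] = {}
        self.snapshots: Dict[str, int] = {}  # snapshot id -> size in bytes
        self._ids = itertools.count(1)

    def create_volume(self, size_bytes: int,
                      from_snapshot: Optional[str] = None) -> Volume:
        if from_snapshot is not None:
            # A volume created from a snapshot must be at least as large.
            size_bytes = max(size_bytes, self.snapshots[from_snapshot])
        volume = Volume(f"vol-{next(self._ids)}", size_bytes)
        self.volumes[volume.volume_id] = volume
        return volume

    def expand_volume(self, volume_id: str, new_size_bytes: int) -> Volume:
        volume = self.volumes[volume_id]
        if new_size_bytes < volume.size_bytes:
            raise ValueError("shrinking is not supported in this sketch")
        volume.size_bytes = new_size_bytes
        return volume

    def create_snapshot(self, volume_id: str) -> str:
        snapshot_id = f"snap-{next(self._ids)}"
        self.snapshots[snapshot_id] = self.volumes[volume_id].size_bytes
        return snapshot_id

    def publish_volume(self, volume_id: str, node_id: str) -> None:
        # Stands in for "attach and mount on a specific host".
        self.volumes[volume_id].published_on = node_id

    def unpublish_volume(self, volume_id: str) -> None:
        self.volumes[volume_id].published_on = None

    def delete_volume(self, volume_id: str) -> None:
        volume = self.volumes.get(volume_id)
        if volume is not None and volume.published_on is not None:
            raise RuntimeError("volume is still attached to a node")
        self.volumes.pop(volume_id, None)
```

A CO would typically call these in the order create, publish, unpublish, delete, with resize and snapshot happening at any point while the volume exists.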

If you feel like reading the nitty-gritty details, you can check the CSI specification.

Our Kubernetes CSI plugin

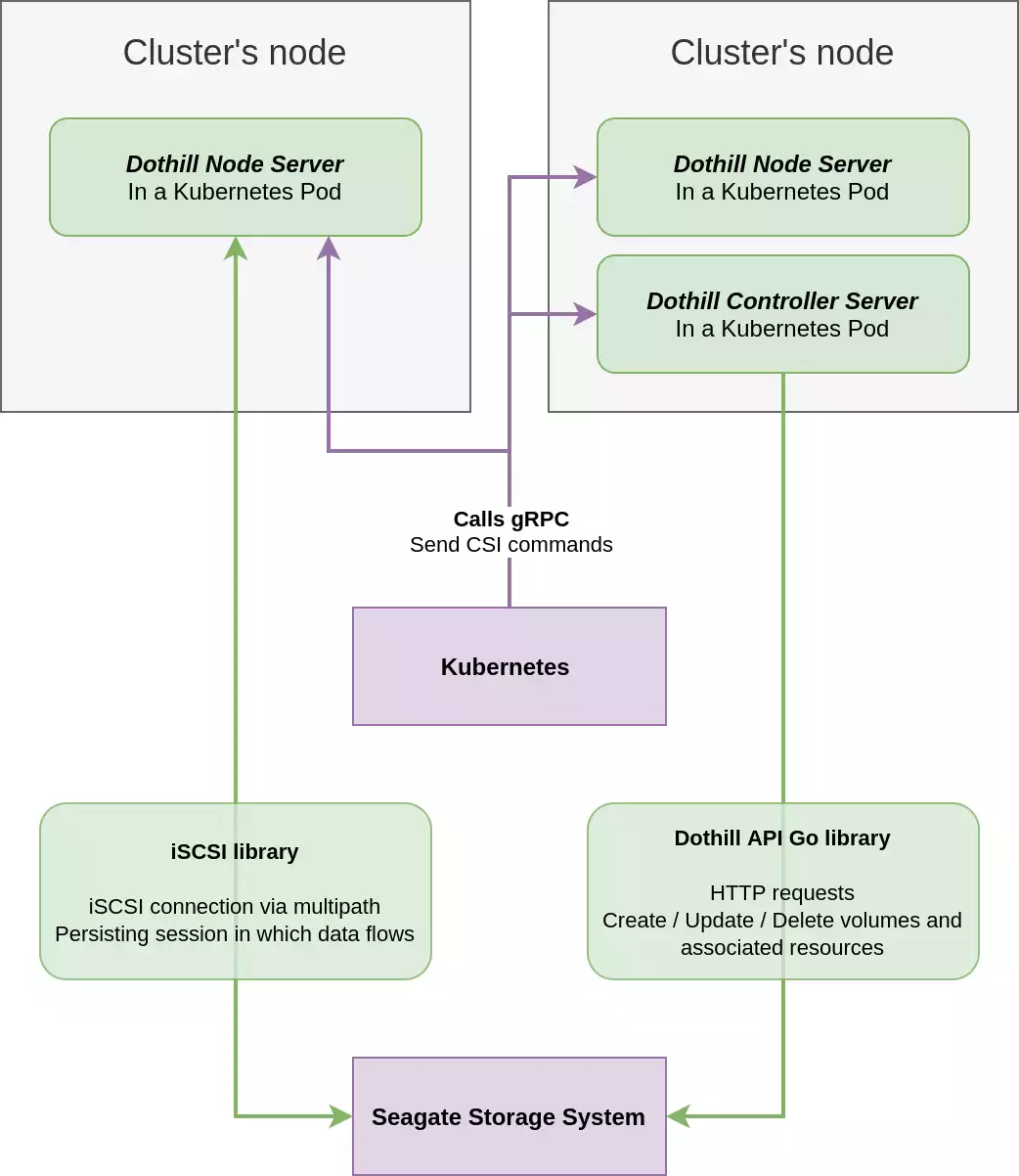

A CSI plugin consists of three parts.

- The storage system itself. This could be an appliance (like the Seagate / Dot Hill appliances that we’re working with) but it could also be a service with an API, for instance AWS EBS.

- The Controller Plugin. This is a gRPC service that manages volumes on the storage provider on behalf of the orchestrator.

- The Node Plugin. This is another gRPC service which takes care of mounting and unmounting volumes on nodes.

The storage appliance

To choose an appliance or a storage provider for a CSI plugin (when you are not writing the plugin because you need to support a specific one), you need to look at various parameters: protocols, documentation, price range, reliability, and so on.

When we started thinking about developing a CSI driver at Enix, we wanted to make storage accessible to small businesses (as well as big ones) that have on-premises requirements and run on Kubernetes. We found a storage model originally created by Dot Hill Systems (now part of Seagate) that met our requirements: entry-level pricing (about $5k per unit) while being reliable and performant, two controllers enabling high availability, and good, complete API documentation. Before that, we had found some interesting appliances, but with little or no documentation, so this one was a very good candidate.

Concerning protocols, the appliance we chose (like the later ones from the same product line) is iSCSI compatible. This is great, since being able to expose a device over the network was an obvious requirement given the nature of the CSI specification. iSCSI stands for Internet Small Computer Systems Interface; it carries the SCSI protocol (a set of standards for physically connecting and transferring data between computers and peripheral devices) over the network.

The controller

The controller is in charge of managing volumes on the storage provider. It receives CSI commands via gRPC and translates them into API calls to the storage provider.

Implementing the controller side of the CSI specification is quite easy, since it only consists of making API calls to the well-documented appliance we chose. There also aren’t many functions to implement. The simplest implementation of a CSI driver may include only a few functions, and can be completed with some optional ones. The minimum is the following set:

| CSI command | Description |
|---|---|
| CreateVolume | Create a volume |
| DeleteVolume | Delete a volume |
| ControllerPublishVolume | Map a volume on a host |
| ControllerUnpublishVolume | Unmap a volume from a host |
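The four commands in the table can be sketched as a thin translation layer on top of an API client. In the sketch below, `FakeApplianceClient` and its `create`, `delete`, `map` and `unmap` methods are hypothetical stand-ins invented for illustration; they do not reflect the real appliance API or the dothill-api-go library. One detail worth showing is idempotency: the CSI specification asks for these calls to be safe to repeat, because the CO may retry them.

```python
from typing import Dict, List, Set, Tuple


class FakeApplianceClient:
    """Hypothetical appliance API client; it only records what it is asked."""

    def __init__(self) -> None:
        self.volumes: Dict[str, int] = {}          # volume name -> size in bytes
        self.mappings: Set[Tuple[str, str]] = set()  # (volume name, initiator)
        self.calls: List[str] = []                 # log of API calls made

    def create(self, name: str, size_bytes: int) -> None:
        self.calls.append(f"create {name}")
        self.volumes[name] = size_bytes

    def delete(self, name: str) -> None:
        self.calls.append(f"delete {name}")
        del self.volumes[name]

    def map(self, name: str, initiator: str) -> None:
        self.calls.append(f"map {name} {initiator}")
        self.mappings.add((name, initiator))

    def unmap(self, name: str, initiator: str) -> None:
        self.calls.append(f"unmap {name} {initiator}")
        self.mappings.discard((name, initiator))


class Controller:
    """Translates the four controller commands into client calls."""

    def __init__(self, client: FakeApplianceClient) -> None:
        self.client = client

    def create_volume(self, name: str, size_bytes: int) -> str:
        existing = self.client.volumes.get(name)
        if existing is not None:
            if existing != size_bytes:
                raise ValueError("volume already exists with a different size")
            return name  # repeated request: nothing to do
        self.client.create(name, size_bytes)
        return name

    def delete_volume(self, name: str) -> None:
        if name in self.client.volumes:  # deleting a missing volume succeeds
            self.client.delete(name)

    def controller_publish_volume(self, name: str, initiator: str) -> None:
        if (name, initiator) not in self.client.mappings:
            self.client.map(name, initiator)

    def controller_unpublish_volume(self, name: str, initiator: str) -> None:
        if (name, initiator) in self.client.mappings:
            self.client.unmap(name, initiator)
```

Calling `create_volume` twice with the same name and size results in a single `create` call on the client, which is the behaviour a retrying CO relies on.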

We implemented the API client in a separate project, dothill-api-go, and then used it to implement the CSI calls.

The node

The node is in charge of managing volumes on hosts, so there is one node instance per host (that is, per node of the cluster). Like the controller, it receives CSI commands via gRPC. Its implementation, however, is more complex, since we had to work with low-level components. iSCSI is fairly simple to use via the iscsiadm command, but we also had to use multipathd to get redundancy across the appliance’s controllers, and multipathd caused us some issues that are described later in this blog post.

Luckily, we found a library called csi-lib-iscsi, provided by the Kubernetes team, which is used to manage iSCSI devices with multipathd. We nevertheless found some bugs in it, so we forked it, fixed them, and improved the library to make it easier to use and more reliable.
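For readers who have never used iscsiadm, the sketch below builds the discovery, login and logout command lines for a target that is reachable through several portals (for instance, one per appliance controller). It only constructs argument lists and does not run anything. The function names, the example IQN and the portal addresses are made up for illustration, and this is not how csi-lib-iscsi is structured internally.

```python
from typing import List


def discovery_command(portal: str) -> List[str]:
    """Ask a portal which targets it exposes."""
    return ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal]


def login_command(target_iqn: str, portal: str) -> List[str]:
    """Open a session to one target through one portal."""
    return ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, "--login"]


def logout_command(target_iqn: str, portal: str) -> List[str]:
    """Close the session opened by login_command."""
    return ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, "--logout"]


def login_plan(target_iqn: str, portals: List[str]) -> List[List[str]]:
    """Discovery then login on every portal, so that each portal
    contributes one path that multipathd can later group together."""
    plan: List[List[str]] = []
    for portal in portals:
        plan.append(discovery_command(portal))
        plan.append(login_command(target_iqn, portal))
    return plan


if __name__ == "__main__":
    # Hypothetical target name and portal addresses.
    for command in login_plan("iqn.2000-01.com.example:target0",
                              ["10.0.0.1:3260", "10.0.0.2:3260"]):
        print(" ".join(command))
```

Logging in through each portal is what gives the host several block devices for the same volume; grouping them into a single multipath device is then multipathd’s job.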

How it works all together

In summary, the CO (Kubernetes in this diagram, but it can be any CO implementing CSI) sends CSI commands to the node and controller servers. The controller uses the dothill-api-go library to send API calls to the appliance to create and map volumes. Nodes then attach and mount the mapped devices on the host using the csi-lib-iscsi library, and Kubernetes bind-mounts the mounted path into the containers that require a volume.

Implementation choices

The CSI specification in itself is fairly simple and could theoretically be easy to implement, but the reality in the field is quite different. The hardware and low-level layers involved in such a system add some significant challenges.

We faced concurrency issues in both the controller and node servers. In both cases, the appliance and the device-handling part of the kernel don’t always allow as much concurrency as we would like, since they were originally built with the idea that they would be used by a human, not by a program attaching and removing tens of volumes in a few seconds.
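One generic way to cope with a backend that does not tolerate many simultaneous operations is to cap the number of operations in flight. The sketch below does this with a semaphore; it is an illustration of the general technique, not a description of how our plugin handles it. `Throttle`, `run_all` and `fake_attach` are hypothetical names, and `fake_attach` merely sleeps to simulate a slow attach.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


class Throttle:
    """Lets at most `limit` operations run at the same time."""

    def __init__(self, limit: int) -> None:
        self._semaphore = threading.BoundedSemaphore(limit)
        self._lock = threading.Lock()
        self._active = 0
        self.peak = 0  # highest number of simultaneous operations observed

    def run(self, operation: Callable, *args):
        with self._semaphore:  # blocks while `limit` operations are running
            with self._lock:
                self._active += 1
                self.peak = max(self.peak, self._active)
            try:
                return operation(*args)
            finally:
                with self._lock:
                    self._active -= 1


def run_all(throttle: Throttle, operation: Callable,
            items: Iterable, workers: int = 8) -> List:
    """Submit every item from a thread pool, but go through the throttle."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda item: throttle.run(operation, item), items))


def fake_attach(volume_id: str) -> str:
    """Stand-in for a slow attach call (hypothetical)."""
    time.sleep(0.01)
    return f"{volume_id}: attached"


if __name__ == "__main__":
    throttle = Throttle(limit=2)
    results = run_all(throttle, fake_attach, [f"vol-{i}" for i in range(10)])
    print(len(results), "volumes attached, peak concurrency:", throttle.peak)
```

With a limit of 1 this degenerates into a plain mutex, which is often the simplest safe choice when the underlying layer was designed for interactive use.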

Setting up a development cluster requires some work. Depending on your infrastructure and needs, you may want, like us at Enix, to have your compute VMs on a separate network from your storage. Such a setup requires configuring the network correctly, so that the nodes of your cluster can access both the API and the iSCSI controllers of the appliance. Since low-level layers are involved, you may also want a multi-OS cluster in order to test portability.

Setting up integration tests can be pretty tough too. Since the plugin runs in a cluster, we would have needed to spawn one cluster per CI pipeline in order to test the plugin before releasing it.

As mentioned earlier, iSCSI and multipathd, combined with udev and the kernel itself, can be pretty tough to tame. This is the subject of the following part.

How battle tested solutions don’t always fit your needs

Using multipathd was very important to us in order to achieve high availability. However, we had some trouble using it: multipathd operates at quite a low level, so it’s not always easy to understand what is going on.

To ensure that our plugin works smoothly, we tested it a lot, in many different use cases. The main issue we faced was filesystem corruption (which is a huge one). There are several cases in which multipathd can map devices in a way that produces corruption, or at least something that looks like corruption. We fixed these bugs one by one in order to end up with a corruption-free driver.

One cause of corruption was multipathd mapping two devices with different WWIDs (unique volume identifiers) together. We therefore implemented a consistency check that runs before mounting and unmounting a device, to make sure we will not corrupt our filesystems.
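The idea behind such a check can be sketched in a few lines: before touching a multipath device, verify that every path grouped under it reports the WWID of the volume we expect. The function below is a simplified illustration, not the check implemented in our driver. It takes the WWIDs as an input mapping, and how they are collected from the system is deliberately left out; all names are hypothetical.

```python
from typing import Dict


class InconsistentMultipathError(Exception):
    """Raised when a multipath device groups paths that should not be together."""


def check_multipath_consistency(expected_wwid: str,
                                path_wwids: Dict[str, str]) -> None:
    """Validate the paths of one multipath device.

    `path_wwids` maps each underlying path device (for example "sdb")
    to the WWID it reports. The check passes silently when every path
    reports `expected_wwid`, and raises otherwise.
    """
    if not path_wwids:
        raise InconsistentMultipathError("multipath device has no paths")
    mismatched = {device: wwid for device, wwid in path_wwids.items()
                  if wwid != expected_wwid}
    if mismatched:
        details = ", ".join(f"{device}={wwid}"
                            for device, wwid in sorted(mismatched.items()))
        raise InconsistentMultipathError(
            f"expected WWID {expected_wwid}, but found: {details}")


if __name__ == "__main__":
    # Two paths to the same volume: consistent, nothing is raised.
    check_multipath_consistency("wwid-a", {"sdb": "wwid-a", "sdc": "wwid-a"})
    # One path belongs to another volume: refuse to mount or unmount.
    try:
        check_multipath_consistency("wwid-a", {"sdb": "wwid-a", "sdd": "wwid-b"})
    except InconsistentMultipathError as error:
        print("refusing to continue:", error)
```

Failing loudly here is the point: an aborted mount can be retried, whereas writing to the wrong volume cannot be undone.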

In the end, the hardest part of implementing a CSI driver is not the CSI spec itself, but everything around it, which can be quite low-level.

Enix & Seagate collaboration on Kubernetes CSI plugin development

After some discussions between Enix and Seagate, we decided to collaborate on this CSI subject. Seagate forked our san-iscsi-csi plugin as seagate-exos-x-csi.

As we provide Kubernetes expertise and infrastructure services to our European customers, we decided to work on a more generic san-iscsi-csi driver, applicable to other storage appliances used with Kubernetes. Our new goal is no longer to target Dothill/Seagate appliances only, since Seagate has already taken the lead on this part. We also didn’t want to stop working on this project, so opening it to new appliances was the sweet spot for us.

We are proud and happy to say that we will continue to work together: Seagate as a global leader on the storage market compatible with Cloud Native architectures, and Enix as a hosting company specialized in DevOps and Cloud Native technologies.

We hope these CSI drivers will help you manage your storage appliances with Kubernetes, and since they are fully open source, we invite you to participate.
